In view of this situation, science fiction writers believe there is no need to dwell on the definition itself for now, “because for a concept that is still in the continuous tense, a definition means a limitation. While the metaverse has not fully taken shape, there will be a thousand metaverses in the eyes of a thousand people, and everyone in it will be like a blind man touching an elephant, with completely different perspectives, demands, and perceptions.”

There has also been much academic discussion of the metaverse. Jing Jing, an associate professor at Minzu University of China, wrote in a paper for the 19th annual meeting of the Chinese Society for Chinese and Foreign Literature and Art Theory: “Since William of Occam and the philosophers of medieval nominalism, the ontology of the world has had at least two layers: one is the physical world we can perceive, and the other is the countless possible worlds constructed from symbols of every kind. Within the world of symbols there have always been two basic divisions, namely the natural sciences and the humanities.”

In her view, once we enter the world of the metaverse, the distinction between what lies inside and outside a symbol’s reference disappears, and the symbol returns to the neutral state it has under nominalism. It points to a constructed parallel world and also to people’s conceptual consciousness, which means the gap between the symbol itself and the human being narrows almost to nothing.

This sounds abstract. To put it simply, take one easy-to-grasp keyword, “parallel world”: to some extent, the metaverse can be understood as “world creation”, using symbols to build countless virtual worlds whose experience closely resembles the physical world. “It means digitizing things in reality and copying them into a parallel world. Each of us can have a digital virtual double, an avatar. This double can do anything in the digital scene, and what it does will in turn affect the real world; this is what people commonly call breaking the dimensional wall. But this is only the crudest description, and each term can branch into endless details.”

This article sets out to understand, from a technical perspective and through specific cases, how the “world creation” behind the metaverse works, so that we can reasonably imagine its future possibilities.

Before we start, we can ask ourselves a question – how do we know what Mars looks like?

How did we establish our understanding of Mars without having seen it with our own eyes and without the accumulation of knowledge in astronomy and astrophysics?

How to “build” Mars?


One important source may be science documentaries. So how are the simulated images in such documentaries produced? Many scenes in space simply cannot be filmed in full. For the science documentary “Hello! Mars”, for example, the production team needed to accurately recreate the Mars exploration mission as it happened, and that process could not be photographed from every angle. So how exactly do you reconstruct it?

“In this case, every shot and every point must be accurately analyzed so that it objectively and truthfully reflects the scientific data,” Wang Zijian, technical director of the China Central Radio and Television Station documentary “Hello! Mars”, told The Paper (www.thepaper.cn). According to Wang, this precise reconstruction was done with Omniverse: “We use the USD model, whose advantage is that it is entirely based on scientific computation and can be matched one-to-one against real-world objects such as the Mars rover and the probe. Desensitized data on the Mars rover were obtained from the bureau; at the time it was only point-cloud data. Omniverse turned the point cloud into a polygon model with topology, which was then stored as USD and became a digital asset. That is very convenient, and it can be used directly in producing the documentary.”
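
Turning a point cloud into “a polygon model with topology” can be illustrated with a deliberately simplified case. The sketch below assumes the points were sampled on a regular rows × cols grid (as a terrain scan might be), so neighboring samples can be stitched into two triangles per grid cell. It is not Omniverse’s reconstruction method, which the article does not describe; real scans are unstructured and need proper surface-reconstruction algorithms. The function name `grid_to_mesh` is hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]
Triangle = Tuple[int, int, int]


def grid_to_mesh(points: List[Point], rows: int, cols: int) -> List[Triangle]:
    """Triangulate a point cloud sampled on a regular rows x cols grid.

    Assumes points are stored row-major (index = r * cols + c), with the
    column along +x and the row along +y. Triangles are returned as vertex
    index triples, wound counter-clockwise when viewed from +z.
    """
    if len(points) != rows * cols:
        raise ValueError("point count does not match grid dimensions")
    triangles: List[Triangle] = []
    for r in range(rows - 1):
        for c in range(cols - 1):
            i00 = r * cols + c      # this sample
            i01 = i00 + 1           # right neighbour
            i10 = i00 + cols        # neighbour in the next row
            i11 = i10 + 1           # diagonal neighbour
            # Split each grid cell into two triangles sharing the diagonal.
            triangles.append((i00, i01, i11))
            triangles.append((i00, i11, i10))
    return triangles


# A hypothetical 3 x 3 terrain patch (x = column, y = row, z = height).
heights = [[0.0, 0.1, 0.0], [0.2, 0.5, 0.1], [0.0, 0.3, 0.0]]
cloud = [(float(c), float(r), heights[r][c]) for r in range(3) for c in range(3)]
tris = grid_to_mesh(cloud, 3, 3)
print(len(tris))  # 8: two triangles for each of the four cells
```

The shared index list is what “topology” means here: each vertex is stored once and referenced by every triangle that touches it, so the mesh knows which faces are neighbors.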

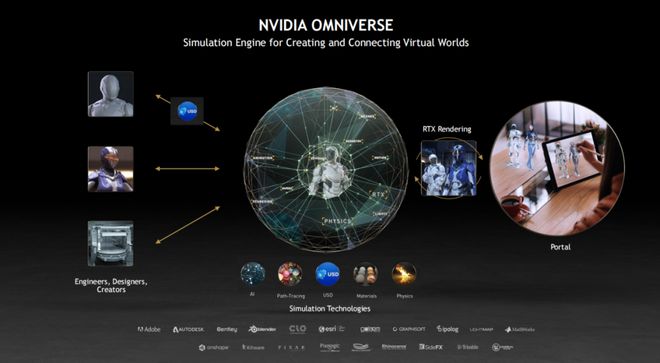

Omniverse is a real-time 3D design collaboration tool launched by NVIDIA in 2019. At the 2021 GTC conference, NVIDIA founder and CEO Jensen Huang (Huang Renxun) described it this way: “Omniverse allows individuals to simulate and create a shared 3D virtual world that follows the laws of physics.” USD (Universal Scene Description) is the foundation of Omniverse.

USD originated at the animation studio Pixar. In animation production, different departments use different design software, so working together requires tedious chores such as format conversion. Pixar therefore created a unified scene format, USD, and open-sourced it in 2016, so that 3D content produced in different software can all use the USD format.
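
To make “a unified scene format” concrete: USD has a human-readable text encoding (.usda). The sketch below writes a single triangle as a `Mesh` prim using USD’s standard `points`, `faceVertexCounts` and `faceVertexIndices` attributes. It deliberately uses only string formatting rather than Pixar’s `pxr` Python bindings, so it covers just this one case; the helper `mesh_to_usda` and the prim name `Triangle` are hypothetical, and production code would normally go through the official USD API.

```python
from typing import Sequence, Tuple


def mesh_to_usda(name: str,
                 points: Sequence[Tuple[float, float, float]],
                 faces: Sequence[Sequence[int]]) -> str:
    """Serialize one polygon mesh as a minimal USD ASCII (.usda) layer."""
    pts = ", ".join(f"({x}, {y}, {z})" for x, y, z in points)
    counts = ", ".join(str(len(f)) for f in faces)          # vertices per face
    indices = ", ".join(str(i) for f in faces for i in f)   # flattened face list
    return (
        "#usda 1.0\n"
        "\n"
        f'def Mesh "{name}"\n'
        "{\n"
        f"    int[] faceVertexCounts = [{counts}]\n"
        f"    int[] faceVertexIndices = [{indices}]\n"
        f"    point3f[] points = [{pts}]\n"
        "}\n"
    )


usda = mesh_to_usda("Triangle", [(0, 0, 0), (1, 0, 0), (0, 1, 0)], [(0, 1, 2)])
print(usda)
```

Because the description is tool-neutral, any USD-aware application should be able to read the same file, which is the point of replacing per-program formats with one shared one.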

“Take PPT as an example: five people might open the same deck and share one data stream, so a change made by one person is immediately visible to the others. With USD, the same holds in a three-dimensional scene: changes made by one person are immediately visible to the others, which makes communication and collaboration easier.”
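
The “one person edits, everyone sees it” behavior can be sketched as a single shared scene that notifies every connected session when a value changes (the observer pattern). This is a conceptual illustration only: the classes `SharedScene` and `Session` are hypothetical and do not describe how Omniverse actually synchronizes USD data between applications.

```python
from typing import Callable, Dict, List, Tuple

Key = Tuple[str, str]                      # (prim path, attribute name)
Listener = Callable[[str, str, object], None]


class SharedScene:
    """A toy shared scene: one data store, many subscribed sessions."""

    def __init__(self) -> None:
        self._attrs: Dict[Key, object] = {}
        self._listeners: List[Listener] = []

    def subscribe(self, listener: Listener) -> None:
        self._listeners.append(listener)

    def set(self, prim: str, attr: str, value: object) -> None:
        # Store the change once, then push it to every subscriber.
        self._attrs[(prim, attr)] = value
        for notify in self._listeners:
            notify(prim, attr, value)

    def get(self, prim: str, attr: str) -> object:
        return self._attrs[(prim, attr)]


class Session:
    """One collaborator's local view, kept in sync by notifications."""

    def __init__(self, user: str, scene: SharedScene) -> None:
        self.user = user
        self.view: Dict[Key, object] = {}
        scene.subscribe(self.on_change)

    def on_change(self, prim: str, attr: str, value: object) -> None:
        self.view[(prim, attr)] = value


scene = SharedScene()
alice, bob = Session("alice", scene), Session("bob", scene)
scene.set("/Rover", "color", "red")    # Alice changes the rover's color...
print(bob.view[("/Rover", "color")])   # ...and Bob's view updates at once: red
```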

An example from actual production work: “In the early stage you build in 3D software. After you finish a model, you apply texture maps; eventually it becomes a scene, and after rendering it is output as an image. 3ds Max, for example, has its own storage format. But the assets each program produces are only meaningful as that program’s own project files, whereas the whole point of assets is that they can be exchanged. If every company has its own file format, exchange is very difficult. What is the result? A model that is finished in software A has to be fixed after it is opened in software B, and the fixed version has to be fixed again when it is reopened in A.”
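
The A-to-B-to-A problem can be reproduced with two hypothetical formats that each understand only some of a model’s properties. In the sketch below, “format A” keeps materials but not animation and “format B” the reverse (both are invented for illustration, not real file formats). Passing a model through them drops data on every hop, whereas a shared schema that covers every property preserves it.

```python
from typing import Dict, FrozenSet

Model = Dict[str, object]

# Hypothetical: the properties each tool's native format can store.
FORMAT_A: FrozenSet[str] = frozenset({"mesh", "material"})
FORMAT_B: FrozenSet[str] = frozenset({"mesh", "animation"})


def convert(model: Model, supported: FrozenSet[str]) -> Model:
    """Keep only the properties the target format can represent."""
    return {k: v for k, v in model.items() if k in supported}


original: Model = {"mesh": "rover_mesh", "material": "dusty_paint", "animation": "wheel_spin"}

# Pass the model through A, then B, then A again.
in_a = convert(original, FORMAT_A)
in_b = convert(in_a, FORMAT_B)
back_in_a = convert(in_b, FORMAT_A)
print(sorted(back_in_a))  # ['mesh']: material and animation are gone

# With one shared schema covering every property, nothing is lost.
SHARED = FORMAT_A | FORMAT_B
print(convert(convert(original, SHARED), SHARED) == original)  # True
```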

Because the USD model is built on a standardized data structure, the quality of presentation stays consistent: the lighting, color, and material appearance of the whole model are unified, and collaborative creation can be built on that basis.

“If digital assets cannot be standardized, in fact, there is no way to achieve digital collaboration. The so-called collaborative work is empty talk.”

How should we understand “digital assets” here, and why does digital collaboration matter so much?

Why are digital assets, digital collaboration and real-time ray tracing important?

According to Wang Zijian, a file saved in USD format can be reused by many programs, which is why such files are called digital assets. Digital assets have a second function, iteration, and that iteration is non-destructive. “For example, say I build a lunar rover; that rover is version one. To build version two, I would ordinarily have to save a copy and then modify it destructively. USD avoids this problem by applying version control to ‘small revisions’ on top of the original. That way digital assets are preserved and reused, and changes remain traceable.”
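
The “small revisions on top of the original” idea resembles USD’s layer stacking, in which a stronger layer’s opinion about an attribute overrides a weaker layer’s without editing it. The sketch below models only that strongest-opinion-wins rule with plain dictionaries; real USD composition has many more rules, and the rover attribute names and the `compose` helper are invented for illustration.

```python
from typing import Dict, List

Layer = Dict[str, object]


def compose(layers: List[Layer]) -> Layer:
    """Resolve attributes from a layer stack ordered strongest-first.

    Each attribute takes its value from the strongest layer that has an
    opinion about it. The input layers themselves are never modified.
    """
    result: Layer = {}
    for layer in reversed(layers):   # apply the weakest layer first...
        result.update(layer)         # ...so stronger layers overwrite it
    return result


base_v1: Layer = {"wheels": 6, "color": "white", "solar_panels": 4}
revision_v2: Layer = {"color": "gold"}   # a "small revision" layered on top

v1 = compose([base_v1])
v2 = compose([revision_v2, base_v1])
print(v2)       # {'wheels': 6, 'color': 'gold', 'solar_panels': 4}
print(base_v1)  # unchanged, so version one remains available and traceable
```

Keeping each revision in its own layer is what makes the history recoverable: removing `revision_v2` from the stack restores version one exactly.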

Then on this basis, there is the possibility of digital collaboration.

In the traditional linear workflow, the visual director could not see the complete film until the very end. Any change meant adjusting everything accordingly, sometimes starting over from scratch. The losses were large: the production cycle stretched out significantly, and personnel and equipment costs drove the overall budget up sharply.
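
Why late changes are so expensive in a linear workflow can be shown with a little arithmetic: a change discovered only at the end forces every stage from the affected one onward to be redone. The stage names and durations below are hypothetical placeholders, not figures from the production.

```python
from typing import List, Tuple

# Hypothetical linear pipeline: (stage, days of work).
PIPELINE: List[Tuple[str, int]] = [
    ("modeling", 20),
    ("texturing", 10),
    ("lighting", 8),
    ("rendering", 30),
    ("compositing", 5),
]


def rework_days(pipeline: List[Tuple[str, int]], changed_stage: str) -> int:
    """Days to redo when a change to `changed_stage` is found only at the end.

    In a strictly linear workflow every stage downstream of the change
    consumes its output, so all of those stages must be repeated.
    """
    names = [name for name, _ in pipeline]
    start = names.index(changed_stage)
    return sum(days for _, days in pipeline[start:])


print(rework_days(PIPELINE, "modeling"))  # 73: every stage again
print(rework_days(PIPELINE, "lighting"))  # 43
```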

In a real-time iterative workflow, the most important change is achieving “what you see is what you get”. The visual director can watch every step, see how models, scenes, atmosphere, and other elements come together, and revise the script in response to what the real-time render already shows. This is no longer a mode in which each person simply makes something and uploads it, but an iterative mode of creation in which everyone depends on one another.

Furthermore, with this technical support, changes in creative ideas can be picked up, iterated on, and presented in real time by every related role, which is what digital collaboration means.

Another important technological advance is real-time ray tracing. In 2018, NVIDIA launched a real-time ray tracing technology that pairs ray tracing with AI.

“Ray tracing is a theory, and only with ray tracing can we fully simulate the real world and render it realistically. When NVIDIA launched real-time ray tracing in 2018, it to some extent forced industry workflows to change, and many things that could not be done before became possible.”
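
At its core, ray tracing follows rays through a scene and computes what they hit. The minimal sketch below uses a standard textbook formulation, not NVIDIA’s implementation: it intersects one camera ray with a sphere by solving the quadratic |o + t·d − c|² = r² for the distance t along the ray, then shades the hit point with Lambert’s cosine law. The scene values are arbitrary.

```python
import math
from typing import Optional, Tuple

Vec = Tuple[float, float, float]


def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def normalize(v: Vec) -> Vec:
    n = math.sqrt(dot(v, v))
    return (v[0] / n, v[1] / n, v[2] / n)


def hit_sphere(origin: Vec, direction: Vec, center: Vec, radius: float) -> Optional[float]:
    """Distance t to the nearest hit along a unit-length ray, or None."""
    oc = sub(origin, center)
    half_b = dot(oc, direction)          # half of the usual quadratic 'b' term
    c = dot(oc, oc) - radius * radius
    disc = half_b * half_b - c           # discriminant (direction is unit length)
    if disc < 0:
        return None                      # the ray misses the sphere
    t = -half_b - math.sqrt(disc)        # nearer of the two roots
    # Ignore hits behind the camera (an origin inside the sphere is not handled).
    return t if t > 0 else None


def shade(origin: Vec, direction: Vec, center: Vec, radius: float, light_dir: Vec) -> float:
    """Lambertian brightness in [0, 1] of whatever the ray hits (0 on a miss)."""
    t = hit_sphere(origin, direction, center, radius)
    if t is None:
        return 0.0
    point: Vec = (origin[0] + t * direction[0],
                  origin[1] + t * direction[1],
                  origin[2] + t * direction[2])
    normal = normalize(sub(point, center))
    return max(0.0, dot(normal, normalize(light_dir)))


camera: Vec = (0.0, 0.0, 0.0)
ray = normalize((0.0, 0.0, -1.0))        # looking down -z
sphere_center, sphere_radius = (0.0, 0.0, -5.0), 1.0
print(hit_sphere(camera, ray, sphere_center, sphere_radius))              # 4.0
print(shade(camera, ray, sphere_center, sphere_radius, (0.0, 0.0, 1.0)))  # 1.0
```

A full image repeats this for every pixel, and realistic lighting needs many additional rays per pixel for shadows, reflections, and indirect light, which is why doing it in real time was long out of reach.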

Concretely, take a shot in which a beam of light hits a bottle, lasting perhaps five seconds. Under the CCTV standard, 50 frames must be rendered for each second, so five seconds means 250 frames; at eight hours of rendering per frame, that is 2,000 hours. If the director then discovers that the camera is in the wrong place and the shot must be redone, those 2,000 hours are wasted.
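
Spelled out, the arithmetic behind the 2,000-hour figure is below. The conversion to days is added here and assumes the frames are rendered one after another on a single machine, which the article does not specify.

```latex
\begin{aligned}
N_{\text{frames}} &= 5\,\text{s} \times 50\,\text{frames/s} = 250\ \text{frames}\\
T_{\text{offline}} &= 250\ \text{frames} \times 8\,\text{h/frame} = 2000\,\text{h} \approx 83.3\ \text{days}
\end{aligned}
```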

With real-time ray tracing, those 2,000 hours become real time: fifty frames can be rendered directly in one second. This ties back to the traditional linear workflow: “Why was it linear before? Because everyone was focused on reducing communication costs. Now that real-time rendering lets us view results directly on site, we dare to talk about real-time collaboration, and costs drop sharply. In the past, a film might take three or four studios and half a year of hard work, with the director running back and forth between them. Now production has suddenly become flat: dozens of people work in one environment, and the film is made in a month or two.”
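
The gap between the two workflows is easiest to see as a per-frame time budget. The sketch below only reworks the article’s own numbers (50 frames per second, eight hours per offline frame, a five-second shot); it says nothing about how fast any particular GPU actually is.

```python
FPS = 50                       # frames per second (CCTV standard cited in the article)
OFFLINE_HOURS_PER_FRAME = 8    # offline render time per frame (article's example)
SHOT_SECONDS = 5               # length of the example shot

frames = SHOT_SECONDS * FPS                         # 250 frames
offline_hours = frames * OFFLINE_HOURS_PER_FRAME    # 2000 hours offline
realtime_budget_ms = 1000 / FPS                     # 20.0 ms allowed per frame
# Per-frame speedup needed: 8 h expressed in seconds, divided by 1/50 s.
speedup = OFFLINE_HOURS_PER_FRAME * 3600 * FPS      # 1,440,000x

print(frames, offline_hours, realtime_budget_ms, f"{speedup:,}")
# 250 2000 20.0 1,440,000
```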

“Real-time ray tracing showed us a new possibility. It may add 200 to 300 billion US dollars to the creative market and encourage designers to use the technology in their work. Once we had graphics visualization and AI, we found that the physics engine and XR technology we had built earlier could be combined with them, which led to the idea of turning this technology into a platform. Omniverse, released later, was the result of these many improvements in rendering technology,” said He Zhan, Omniverse business development manager at NVIDIA China.

As for the latest progress: “Building on this, the technology has been enhanced further. Real-time rendering now delivers ever higher-quality photorealistic results, multiple renderers connect seamlessly, and a scene’s rendering mode can be switched with one click, for example from Real-Time to Path-Traced. Finally, there is physical simulation and the simulation of groups of mechanical units, which amounts to controlling the real world through the virtual digital world.”
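
“Physical simulation” in this sense means stepping a model of the world forward in time under physical laws. As a minimal illustration, not Omniverse’s physics engine, the sketch below advances a small group of point masses under gravity with semi-implicit Euler integration at a fixed time step and stops them at a ground plane. The `Body` type, the `step` function, and all values are hypothetical.

```python
from dataclasses import dataclass
from typing import List

GRAVITY = -9.81  # m/s^2 along z


@dataclass
class Body:
    name: str
    z: float          # height above the ground (m)
    vz: float = 0.0   # vertical velocity (m/s)


def step(bodies: List[Body], dt: float) -> None:
    """Advance every body by one fixed time step (semi-implicit Euler)."""
    for b in bodies:
        b.vz += GRAVITY * dt      # update velocity first...
        b.z += b.vz * dt          # ...then position using the new velocity
        if b.z <= 0.0:            # crude ground contact: stop at z = 0
            b.z, b.vz = 0.0, 0.0


fleet = [Body("unit_a", z=1.0), Body("unit_b", z=5.0)]
dt = 1 / 60                       # 60 steps per simulated second
for _ in range(60):               # simulate one second
    step(fleet, dt)
for b in fleet:
    print(b.name, round(b.z, 3))
```

A fixed time step keeps the simulation deterministic and repeatable, which matters when the virtual model is meant to predict, and eventually drive, real machines.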
